
A Short Introduction to AI

Symbolic AI

Artificial intelligence was proposed by a handful of pioneers from the nascent field of computer science in the 1950s. A concise definition of the field would be as follows: the effort to automate intellectual tasks normally performed by humans.

For a fairly long time, many experts believed that human-level artificial intelligence could be achieved by having programmers handcraft a sufficiently large set of explicit rules for manipulating knowledge. This approach is known as symbolic AI and was the dominant paradigm in AI from the 1950s to the late 1980s.

In the 1960s, many believed that “the problem of creating artificial intelligence will substantially be solved within a generation”. When these high expectations failed to materialize, researchers and government funding turned away from the field, marking the start of the first AI winter.

Expert Systems

In the 1980s, a new take on symbolic AI, expert systems, started gathering steam among large companies. A few initial success stories triggered a wave of investment. Around 1985, companies were spending over $1 billion each year on the technology; but by the early 1990s, these systems had proven expensive to maintain, difficult to scale, and limited in scope, and interest died down. Thus began the second AI winter.

Deep Learning: AI Hype?

Although some world-changing applications like autonomous cars are already within reach, many more are likely to remain elusive for a long time, such as believable dialogue systems, human-level machine translation across arbitrary languages, and human-level natural-language understanding. In particular, talk of human-level general intelligence shouldn’t be taken too seriously. The risk with high expectations for the short term is that, as technology fails to deliver, research investment will dry up, slowing progress for a long time.

Although we’re still in a phase of intense optimism, we may currently be witnessing the third cycle of AI hype and disappointment.

Machine Learning

In classical programming, such as symbolic AI, humans input rules (a program) and data to be processed according to these rules, and out come answers:

\begin{equation} \text{rules $+$ data} \Rightarrow \text{classical programming} \Rightarrow \text{answers} \nonumber \end{equation}

For example, an expert system contains two main components: an inference engine and a knowledge base.

The inference engine applies logical rules to facts from the knowledge base. These rules typically take the form of if-then statements. A more flexible system would use the knowledge base as an initial guide and then continue to learn from the expert’s feedback.

Expert systems require a real human expert to enter knowledge (such as every step they took to reach a decision, and how to handle exceptions) into the knowledge base, whereas in machine learning, no such “expert” is needed.
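As a rough illustration of the two components, here is a minimal forward-chaining inference engine in Python. The facts and rules are hypothetical, and forward chaining is only one of several inference strategies expert systems use; the point is that every rule is handcrafted by a human.

```python
# Knowledge base (hypothetical content): known facts plus if-then rules.
facts = {"has_fever", "has_cough"}

# Each rule is (premises, conclusion): IF all premises hold THEN add the conclusion.
rules = [
    ({"has_fever", "has_cough"}, "possible_flu"),
    ({"possible_flu"}, "recommend_rest"),
    ({"has_rash"}, "possible_allergy"),
]

def forward_chain(facts, rules):
    """Inference engine: keep firing rules whose premises are all known, until nothing changes."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

derived = forward_chain(facts, rules)
print(sorted(derived))
# ['has_cough', 'has_fever', 'possible_flu', 'recommend_rest']
```

The `has_rash` rule never fires because its premise is not in the knowledge base; nothing here is learned from data.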

Machine learning arises from a question: could a computer go beyond “what we know how to order it to perform” and learn on its own how to perform a specified task? A machine-learning system is trained rather than explicitly programmed, so the programming paradigm is quite different:

\begin{equation} \text{data $+$ answers} \Rightarrow \text{machine learning} \Rightarrow \text{rules} \nonumber \end{equation}
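To make “data + answers ⇒ rules” concrete, the sketch below gives NumPy some inputs and the answers produced by a rule we pretend not to know, and recovers that rule. It assumes the simplest possible rule, a straight line, and uses ordinary least squares (`np.linalg.lstsq`) as a stand-in for training; deep learning uses much richer models and gradient-based optimization.

```python
import numpy as np

# Data (inputs) and answers (targets), produced by a rule we pretend not to know: y = 3x + 2.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([2.0, 5.0, 8.0, 11.0, 14.0])

# Add a column of ones so the model can learn an intercept as well as a slope.
A = np.column_stack([x, np.ones_like(x)])

# Least-squares fit: the learned (w, b) is the "rule".
(w, b), *_ = np.linalg.lstsq(A, y, rcond=None)
print(w, b)  # close to 3.0 and 2.0

# The learned rule can now produce answers for new data.
print(w * 10.0 + b)  # close to 32.0
```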

Machine learning is a type of artificial intelligence. It can be broadly divided into supervised, unsupervised, self-supervised and reinforcement learning.

In supervised learning, a computer is given a set of data together with the expected results and is asked to find relationships between the data and the results. The computer can then learn to predict the result for new data. Supervised learning is by far the dominant form of deep learning today.

In unsupervised learning, a computer has data to play with but no expected result. It is asked to find relationships between entries in the dataset to discover new patterns.
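One classic unsupervised method is k-means clustering, which groups points without ever seeing a label. The following is a minimal NumPy sketch on made-up two-cluster data; the function `kmeans` and its parameters are illustrative, not a library API.

```python
import numpy as np

def kmeans(X, k, n_iter=20, seed=0):
    """Plain k-means: alternate assigning points to the nearest centroid and re-centering."""
    rng = np.random.default_rng(seed)
    # Initialize the centroids with k distinct data points chosen at random.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # Distance from every point to every centroid: shape (n_points, k).
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Move each centroid to the mean of its points (keep it in place if it has none).
        centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
    return labels, centroids

# Unlabeled data: two well-separated blobs (synthetic, for illustration).
X = np.array([[0.0, 0.1], [0.2, 0.0], [0.1, 0.2],
              [5.0, 5.1], [5.2, 4.9], [4.9, 5.0]])
labels, centroids = kmeans(X, k=2)
print(labels)  # the first three points share one cluster id, the last three the other
```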

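Self-supervised learning is supervised learning without human-annotated labels: the labels are generated from the input data itself. Autoencoders, which are trained to reproduce their own input, are a well-known example.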
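For an autoencoder, the “label” is simply the input itself. The sketch below assumes a purely linear autoencoder with squared-error loss, for which the best encoder and decoder are known to span the top principal directions of the data, so it computes them in closed form with SVD instead of training by gradient descent. The data are synthetic.

```python
import numpy as np

# Unlabeled 3-D data that actually lie along a single direction (synthetic).
t = np.linspace(-1.0, 1.0, 50)
X = np.outer(t, [1.0, 2.0, -1.0])          # shape (50, 3)

# Self-supervision: the training target is the input itself; no human labels.
targets = X

# Optimal linear autoencoder with a k-unit bottleneck = projection onto the top-k
# principal directions, which SVD of the centered data gives directly.
mean = X.mean(axis=0)
_, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
k = 1
encoder = Vt[:k].T                         # (3, k): compress 3 features into k codes
decoder = Vt[:k]                           # (k, 3): expand codes back to 3 features

codes = (X - mean) @ encoder               # (50, 1): the learned representation
reconstruction = codes @ decoder + mean
error = np.mean((reconstruction - targets) ** 2)
print(codes.shape, error)                  # error is essentially zero for this data
```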

In reinforcement learning, an agent receives information about its environment and learns to choose actions that will maximize some reward. Currently, reinforcement learning is mostly a research area and hasn’t yet had significant practical successes beyond games.
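The section doesn't name an algorithm, so as a hypothetical illustration here is tabular Q-learning, one standard reinforcement-learning method, on a made-up five-cell corridor. The environment, reward, and hyperparameters (`alpha`, `gamma`, `epsilon`) are assumptions chosen for the example; the agent is never told which way to go and learns it from reward alone.

```python
import numpy as np

# Hypothetical environment: cells 0..4, start at 0, reward 1 for reaching cell 4 (terminal).
# Actions: 0 = move left, 1 = move right.
N_STATES, GOAL = 5, 4

def step(state, action):
    next_state = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL

rng = np.random.default_rng(0)
Q = np.zeros((N_STATES, 2))                # estimated return of each (state, action)
alpha, gamma, epsilon = 0.5, 0.9, 0.2

for episode in range(300):
    state, done = 0, False
    while not done:
        # Epsilon-greedy: usually exploit the current estimates, sometimes explore;
        # ties are broken at random so the agent does not get stuck early on.
        if rng.random() < epsilon or Q[state, 0] == Q[state, 1]:
            action = int(rng.integers(2))
        else:
            action = int(Q[state].argmax())
        next_state, reward, done = step(state, action)
        # Q-learning update toward reward + discounted value of the best next action.
        target = reward + (0.0 if done else gamma * Q[next_state].max())
        Q[state, action] += alpha * (target - Q[state, action])
        state = next_state

policy = Q[:GOAL].argmax(axis=1)           # greedy action in each non-terminal cell
print(policy)                              # 1 means "move right"
```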

Machine learning started to flourish in the 1990s and has quickly become the most popular and most successful subfield of AI.

Deep Learning

Deep learning is a specific subfield of machine learning: a new take on learning information from data that puts an emphasis on learning successive layers of increasingly meaningful representations.

The “deep” in deep learning isn’t a reference to any kind of deeper understanding achieved by the approach; rather, it stands for the idea of successive layers of representations.

Shallow learning refers to machine-learning approaches that focus on learning only one or two layers of representations of the data.

See the deep representations learned by a four-layer neural network for an image of the digit 4.

The Promise

Although we may have unrealistic short-term expectations for AI, the long-term picture is looking bright. We’re only getting started in applying deep learning in real-world applications. Right now, it may seem hard to believe that AI could have a large impact on our world, because it isn’t yet widely deployed — much as, back in 1995, it would have been difficult to believe in the future impact of the internet.

Don’t believe the short-term hype, but do believe in the long-term vision. Deep learning has several properties that justify its status as an AI revolution:

Simplicity: Deep learning removes the need for much heavy-duty feature engineering and preprocessing.

Scalability: Deep learning is highly amenable to parallelization on GPUs or TPUs. Deep-learning models are trained by iterating over small batches of data, allowing them to be trained on datasets of pretty much arbitrary size.

Versatility and reusability: Deep-learning models can be trained on additional data without restarting from scratch. Trained deep-learning models are also repurposable: for instance, it’s possible to take a deep-learning model trained for image classification and drop it into a video-processing pipeline.
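The scalability point rests on mini-batch training: each parameter update touches only a small batch, so memory use per step depends on the batch size rather than the dataset size. Below is a minimal sketch assuming plain linear regression trained by stochastic gradient descent in NumPy; `iterate_minibatches` is a hypothetical helper, and here the data sit in memory, whereas in practice batches could be streamed from disk.

```python
import numpy as np

def iterate_minibatches(X, y, batch_size, rng):
    """Yield shuffled (X_batch, y_batch) pairs covering the dataset once (one epoch)."""
    idx = rng.permutation(len(X))
    for start in range(0, len(X), batch_size):
        batch = idx[start:start + batch_size]
        yield X[batch], y[batch]

# Synthetic data (for illustration): y = 2*x0 - 1*x1 + small noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 2))
y = X @ np.array([2.0, -1.0]) + 0.01 * rng.normal(size=1000)

w = np.zeros(2)
lr = 0.1
for epoch in range(5):
    for xb, yb in iterate_minibatches(X, y, batch_size=32, rng=rng):
        # Gradient of the mean squared error on this batch only.
        grad = 2.0 * xb.T @ (xb @ w - yb) / len(xb)
        w -= lr * grad
print(w)  # close to [2, -1]
```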

Deep learning has only been in the spotlight for a few years, and we haven’t yet established the full scope of what it can do.

Neural Network Structures

Tensors are fundamental to the data representations for neural networks — so fundamental that Google’s TensorFlow was named after them.

Scalars: 0-dimensional tensors

Vectors: 1-dimensional tensors

Matrices: 2-dimensional tensors
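In NumPy, which the sketch below assumes, a tensor’s number of axes (its rank) is `ndim` and its size along each axis is `shape`:

```python
import numpy as np

scalar = np.array(7.0)                  # 0-D tensor
vector = np.array([1.0, 2.0, 3.0])      # 1-D tensor
matrix = np.array([[1.0, 2.0],
                   [3.0, 4.0],
                   [5.0, 6.0]])          # 2-D tensor

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix)]:
    # ndim = number of axes; shape = size along each axis.
    print(name, t.ndim, t.shape)
# scalar 0 ()
# vector 1 (3,)
# matrix 2 (3, 2)
```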

Let’s make data tensors more concrete with real-world examples:

Vector data — 2D tensors of shape (samples, features)

Timeseries data or sequence data — 3D tensors of shape (samples, timesteps, features)

Images — 4D tensors of shape (samples, height, width, channels) or (samples, channels, height, width)

Video — 5D tensors of shape (samples, frames, height, width, channels) or (samples, frames, channels, height, width)
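The sketch below builds zero-filled NumPy arrays with these layouts. The sizes are arbitrary placeholders; only the order and meaning of the axes follow the list above. It also shows that switching images from channels-last to channels-first is just an axis permutation.

```python
import numpy as np

n = 8  # number of samples (hypothetical)
vector_data = np.zeros((n, 20))              # (samples, features)
timeseries  = np.zeros((n, 100, 3))          # (samples, timesteps, features)
images      = np.zeros((n, 32, 32, 3))       # (samples, height, width, channels)
video       = np.zeros((n, 16, 32, 32, 3))   # (samples, frames, height, width, channels)

# Channels-last -> channels-first only reorders the axes; no data are changed.
images_cf = np.transpose(images, (0, 3, 1, 2))   # (samples, channels, height, width)

for name, t in [("vector", vector_data), ("timeseries", timeseries),
                ("images", images), ("images_cf", images_cf), ("video", video)]:
    print(name, t.ndim, t.shape)
```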

There are three main families of network architectures: densely connected networks, convolutional networks, and recurrent networks. A network architecture encodes assumptions about the structure of the data.

A densely connected network is a stack of Dense layers and assumes no specific structure in the input features.
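A Dense layer computes `activation(x @ W + b)`, so every output unit depends on every input feature. The minimal NumPy sketch below stacks three such layers with random, untrained weights (hypothetical sizes) to show how each layer turns the previous representation into a new one. For simplicity ReLU is applied at every layer; a real classifier would usually end with a softmax.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def dense(x, W, b, activation=relu):
    """One Dense layer: every output unit sees every input feature."""
    return activation(x @ W + b)

rng = np.random.default_rng(0)
# Hypothetical layer sizes: 4 input features -> 8 -> 8 -> 3 outputs.
sizes = [4, 8, 8, 3]
params = [(rng.normal(scale=0.5, size=(m, n)), np.zeros(n))
          for m, n in zip(sizes[:-1], sizes[1:])]

x = rng.normal(size=(5, 4))   # batch of 5 samples: (samples, features)
h = x
for W, b in params:
    h = dense(h, W, b)        # each layer produces a new representation
    print(h.shape)
# (5, 8)
# (5, 8)
# (5, 3)
```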

Convnets, or convolutional networks (CNNs), consist of stacks of convolution and max-pooling layers. Convolution layers look at spatially local patterns by applying the same geometric transformation to different spatial locations (patches) in an input tensor.
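Here is a minimal single-channel NumPy sketch of both operations. The kernel is hand-set for illustration (a trained convnet learns its kernels), and, as in most deep-learning libraries, the “convolution” is implemented as cross-correlation without flipping the kernel. The same 2×2 kernel is applied at every patch location.

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation), single channel, stride 1."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Same kernel weights applied to every patch.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(x, size=2):
    """Non-overlapping max pooling; rows/cols that don't fill a full window are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))

# A 6x6 image with a vertical edge: dark left half, bright right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0
# Hypothetical hand-set kernel that responds to left-to-right brightness increases.
kernel = np.array([[-1.0, 1.0],
                   [-1.0, 1.0]])

features = conv2d(image, kernel)   # shape (5, 5); nonzero only along the edge
pooled = max_pool2d(features)      # shape (2, 2)
print(features)
print(pooled)
```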

Recurrent neural networks (RNNs) work by processing sequences of inputs one time step at a time and maintaining a state throughout.
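A minimal NumPy sketch of that loop, in the style of a simple (Elman) RNN: at each timestep the new state is `tanh(W @ x_t + U @ state + b)`. The sizes and random weights are hypothetical; practical recurrent layers such as LSTMs add gating on top of this basic recurrence.

```python
import numpy as np

def simple_rnn(inputs, W, U, b):
    """Process a sequence one timestep at a time, carrying a state vector forward."""
    state = np.zeros(U.shape[0])               # initial state: all zeros
    outputs = []
    for x_t in inputs:                         # inputs: (timesteps, input_features)
        # The new state depends on the current input AND the previous state.
        state = np.tanh(W @ x_t + U @ state + b)
        outputs.append(state)
    return np.stack(outputs)                   # (timesteps, state_size)

rng = np.random.default_rng(0)
timesteps, input_features, state_size = 10, 3, 4   # hypothetical sizes
inputs = rng.normal(size=(timesteps, input_features))
W = rng.normal(scale=0.5, size=(state_size, input_features))
U = rng.normal(scale=0.5, size=(state_size, state_size))
b = np.zeros(state_size)

outputs = simple_rnn(inputs, W, U, b)
print(outputs.shape)   # (10, 4)
```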

Prediction vs. Decision

What is the capital of Delaware?


Alexa, Amazon’s voice assistant, gives the correct answer: “The capital of Delaware is Dover.”

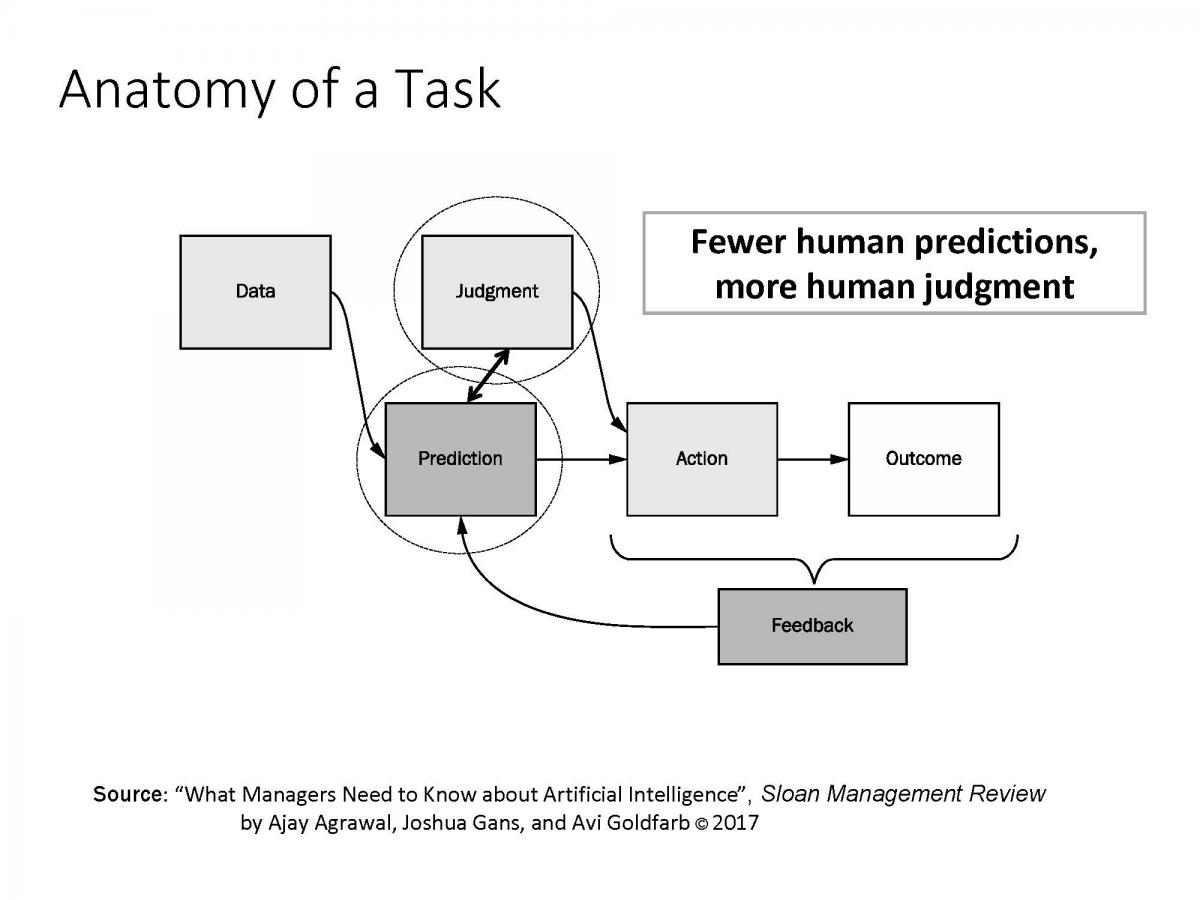

The new wave of artificial intelligence does not actually bring us intelligence but instead a critical component of intelligence — prediction.

When we asked the question, Alexa took the sounds it heard, predicted the words we spoke, and then predicted what information those words were asking for.

Alexa doesn’t “know” the capital of Delaware. But Alexa is able to predict that, when people ask such a question, they are looking for a specific response: Dover.

What is the difference between judgment and prediction?


In the movie “I, Robot,” one scene makes the distinction between prediction and judgment very clear.

Will Smith, the star of the movie, has a flashback to a car accident in which he and a 12-year-old girl are both drowning. A robot arrives, somehow miraculously, and can save only one of them.

The robot calculates that Will Smith has a 45% chance of survival and the girl only an 11% chance. Therefore, the robot saves Will Smith.

Will Smith concludes that the robot made the wrong decision. 11% was more than enough. A human being would have known that.

That’s all well and good, but he is assuming that the robot values his life and the girl’s life equally. To make a decision, the robot needs both the survival predictions and a statement of how much more valuable the girl’s life would have to be than Will Smith’s for it to choose her.

All the decision we’ve seen tells us is that Will Smith’s life was valued at no less than about a quarter of the girl’s life (11/45 ≈ 0.24). That valuation matters, because at some point even Will Smith would disagree with it: if her chance of survival were 1%, or 0.1%, or 0.01%, that decision would flip. That’s judgment: knowing what to do with a prediction once you have one.
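The same reasoning can be written as a tiny expected-value rule. In this sketch `girl_weight` is a hypothetical parameter standing for the judgment (how many times more the girl’s life is valued than Will Smith’s), while the survival probabilities are the prediction. The robot’s choice implies `girl_weight` was no more than 0.45 / 0.11 ≈ 4.09, which is the same statement as Will Smith’s life being worth at least about a quarter of hers.

```python
def choose(p_will, p_girl, girl_weight):
    """Save whoever yields the larger expected value.

    p_will, p_girl: predicted survival probabilities (the prediction).
    girl_weight: hypothetical judgment parameter; how many times more the girl's
                 life is valued than Will's (1.0 means equal value).
    """
    return "girl" if girl_weight * p_girl > p_will else "Will"

p_will, p_girl = 0.45, 0.11

# Break-even weight: above it, saving the girl has the higher expected value.
break_even = p_will / p_girl
print(round(break_even, 2))          # 4.09

print(choose(p_will, p_girl, 1.0))   # Will  (equal valuation: the robot's choice)
print(choose(p_will, p_girl, 5.0))   # girl  (valuing her life 5x flips the decision)
print(choose(p_will, 0.001, 5.0))    # Will  (at 0.1% survival, even 5x is not enough)
```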

So judgment is the process of determining the reward of a particular action in a particular environment. Decision analysis tools (such as optimization and simulation) can be used to balance the reward against the cost (or risk).
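As a hypothetical example of simulation-based decision analysis, the sketch below separates a prediction (a made-up 30% chance of rain) from a judgment (a made-up payoff table), estimates each action’s expected payoff by Monte Carlo simulation, and picks the best one. In a case this simple the expectation could be computed exactly; simulation becomes useful when outcomes depend on many uncertain quantities.

```python
import numpy as np

# Prediction: probability of rain tomorrow (hypothetical model output).
p_rain = 0.3

# Judgment: payoff of each action in each state of the world (hypothetical values).
payoff = {
    "take umbrella":  {"rain": -1.0,  "dry": -1.0},   # small hassle either way
    "leave umbrella": {"rain": -10.0, "dry":  0.0},   # soaked if it rains
}

def simulate(action, p_rain, n=100_000, seed=0):
    """Monte Carlo estimate of an action's expected payoff."""
    rng = np.random.default_rng(seed)
    rain = rng.random(n) < p_rain                      # simulated weather outcomes
    rewards = np.where(rain, payoff[action]["rain"], payoff[action]["dry"])
    return rewards.mean()

estimates = {action: simulate(action, p_rain) for action in payoff}
best = max(estimates, key=estimates.get)               # optimize over the actions
print(estimates, best)
```

With these payoffs, taking the umbrella wins whenever the rain probability exceeds 10%, since leaving it costs 10 × p on average versus a fixed cost of 1.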

We need to understand the consequences of cheap prediction and its importance in decision-making,